
Chameleon's Tongue: One Quick Snatcher

A chameleon's tongue is fast.

Some chameleons can snatch a cricket in 20 milliseconds. By comparison, the average human blink takes about 300 milliseconds, and that estimate is on the quick end.

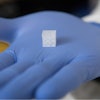

A team of researchers at Purdue's FlexiLab created high-speed, prestressed soft actuators that use stored elastic energy to mimic the chameleon's quick tongue. The robots are made of stretchable polymers, much like rubber bands, but with internal pneumatic channels that expand when pressurized.

The prestressed pneumatic soft robot can extend to five times its length, catch a live fly beetle and retrieve it, all within 120 milliseconds.

According to the researchers, the work could make future robots faster and more accurate.

And if that isn't cool enough, the team also took cues from the three-toed woodpecker to build a high-speed gripper, and from the Venus flytrap to build a soft robot that snaps closed in 50 milliseconds.

The new actuators could lead to advances in robotics because they can grip and hold objects up to 100 times their own weight without any external power.

Jumping Spiders Inspire New Sensors

Harvard researchers have created a new depth sensor inspired by the eyes of a jumping spider. It could soon be in everything from microbotics to wearable devices.

The tiny arachnids have incredible depth perception, which enables them to attack prey with great accuracy.

Researchers from the John A. Paulson School of Engineering and Applied Sciences (SEAS) have created a device that combines a multifunctional, flat metalens with an algorithm to measure depth in a single shot.

Current depth sensors rely on cameras or laser dots to measure and map depth. Humans have stereo vision, which means that we use information from both of our eyes to determine depth.

Jumping spiders are much more efficient. When a jumping spider looks at a fruit fly with one of its principal eyes, the fly appears sharper in one retina's image and blurrier in another. This difference in blur tells the spider how far away the fly is.

In computer vision, the calculation is referred to as depth from defocus, but until now, it has only been possible with large and slow cameras.
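For readers curious about the geometry, here is a minimal sketch of depth from defocus under a simplified thin-lens model. It is not the SEAS team's metalens algorithm: the focal length, aperture, focus distances, and function names (`blur_diameter`, `depth_from_defocus`, `sensor_distance_for_focus`) are all hypothetical, chosen only for illustration. Two simulated images focused at different distances stand in for the spider's two retinas. The blur in each image narrows the object's distance down to two candidates, and only one candidate agrees with both.

```python
import numpy as np

# Hypothetical optics parameters (metres), chosen only for illustration.
FOCAL_LEN = 0.010   # 10 mm lens
APERTURE = 0.005    # 5 mm aperture diameter


def sensor_distance_for_focus(focus_depth):
    """Lens-to-sensor distance that brings `focus_depth` into sharp focus (thin lens)."""
    return 1.0 / (1.0 / FOCAL_LEN - 1.0 / focus_depth)


def blur_diameter(depth, sensor_dist):
    """Blur-circle diameter for a point at `depth` imaged on a sensor at `sensor_dist`."""
    # Thin-lens equation: 1/f = 1/depth + 1/v, so 1/v = 1/f - 1/depth.
    inv_v = 1.0 / FOCAL_LEN - 1.0 / depth
    # Similar triangles: c = A * |s - v| / v = A * |s * (1/v) - 1|.
    return APERTURE * np.abs(sensor_dist * inv_v - 1.0)


def depth_from_defocus(blur_a, blur_b, sensor_a, sensor_b):
    """Estimate depth from the blur measured in two images focused at different planes.

    Sweeps candidate depths and returns the one whose predicted blur pair best
    matches the measured pair (a brute-force search, not a production algorithm).
    """
    candidates = np.geomspace(0.05, 5.0, 20000)  # 5 cm to 5 m, all above FOCAL_LEN
    pred_a = blur_diameter(candidates, sensor_a)
    pred_b = blur_diameter(candidates, sensor_b)
    error = (pred_a - blur_a) ** 2 + (pred_b - blur_b) ** 2
    return float(candidates[np.argmin(error)])


# Two simulated "retinas": one focused at 0.3 m, the other at 1.0 m.
s_near = sensor_distance_for_focus(0.3)
s_far = sensor_distance_for_focus(1.0)

true_depth = 0.6  # where the simulated fruit fly sits, in metres
blur_near = blur_diameter(true_depth, s_near)
blur_far = blur_diameter(true_depth, s_far)

estimate = depth_from_defocus(blur_near, blur_far, s_near, s_far)
print(f"true depth {true_depth} m, estimated {estimate:.3f} m")
```

A single blurry image can't tell whether an object sits in front of or behind the plane of focus, which is why the sketch, like the spider, compares two differently focused images.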

The new metalens captures images and produces a depth map using a computer vision algorithm. Color on the depth map represents object distance: closer objects appear red and farther objects appear blue.

While this could lead to more powerful wearables and microbotics, let's hope that the new advances include robotic jumping spiders.

Researchers Tether Man & Machine

A lack of humanity is one of the biggest concerns with autonomous humanoid robots. What if they aren't human enough?

A team of researchers from the University of Illinois and MIT has developed a way to keep a human in the robot by tethering the two together.

The researchers have created Little Hermes. Right now, it's just a small robot torso with legs, but it operates in sync with a human counterpart.

The small-scale bipedal robot could one day be deployed into situations deemed too dangerous for humans.

The researchers were inspired by the 2011 Fukushima nuclear plant disaster. Humans couldn't intervene as the disaster was unfolding, but a humanoid robot could have helped prevent the nuclear meltdown, whose effects are still rippling throughout the world today.

The idea of human-piloted robots isn't new, but this is one of the first times that the human feels the same forces that the robot experiences.

The researchers created a motion-capture suit that acts as an exoskeleton. The human uses the suit to move the robot but also feels what the robot feels.

This synchronization would also help stabilize the robot.

To demonstrate the force feedback, the researchers struck Little Hermes with a mallet, and its human counterpart felt the impact as well.

Next, the researchers are hoping to make Little Hermes wireless and create robot-to-human force feedback devices for other parts of the body.