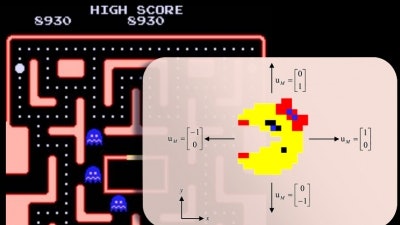

Using a novel approach for computing real-time game strategy, engineers have developed an artificial Ms. Pac-Man player that chomps the existing high score for computerized play.

In the popular arcade game, Ms. Pac-Man must evade ghost enemies while she collects items and navigates an obstacle-populated maze. The game is something of a favorite among engineers and computer scientists, who compete to see who can program the best artificial player.

The record score at the annual Ms. Pac-Man Screen Capture Competition stands at 36,280, but a trio of researchers led by Silvia Ferrari, professor of mechanical and aerospace engineering at Cornell, has produced a laboratory score of 43,720.

The score was achieved using a decision-tree approach in which the optimal moves for the artificial player are derived from the maze geometry and from dynamic equations that predict the movements of the ghosts with 94.6 percent accuracy. As the game progresses, the decision tree is updated in real time.
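To give a rough sense of how a look-ahead decision tree of this kind can work, here is a minimal sketch in Python. It is not the researchers' method: the toy maze, the reward values, the search depth and the ghost model (a single ghost that always steps toward the player) are all assumptions made for illustration, and the names `MAZE`, `predict_ghost`, `best_value` and `choose_move` are hypothetical.

```python
from typing import FrozenSet, Iterator, Tuple

Cell = Tuple[int, int]  # (row, col)
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}

# Hypothetical toy maze: '#' is a wall, '.' is a pellet.
MAZE = [
    "#######",
    "#.....#",
    "#.#.#.#",
    "#.....#",
    "#######",
]

def neighbors(cell: Cell) -> Iterator[Tuple[str, Cell]]:
    """Yield (move name, destination) for every non-wall neighbor."""
    for name, (dr, dc) in MOVES.items():
        nxt = (cell[0] + dr, cell[1] + dc)
        if MAZE[nxt[0]][nxt[1]] != "#":
            yield name, nxt

def predict_ghost(ghost: Cell, player: Cell) -> Cell:
    """Assumed ghost model: step to the open cell nearest the player."""
    options = [nxt for _, nxt in neighbors(ghost)]
    return min(options, key=lambda g: abs(g[0] - player[0]) + abs(g[1] - player[1]))

def best_value(player: Cell, ghost: Cell, pellets: FrozenSet[Cell], depth: int) -> float:
    """Best total reward reachable from this node of the decision tree."""
    if player == ghost:
        return -1000.0  # caught by the ghost (assumed penalty)
    if depth == 0:
        return 0.0
    best = float("-inf")
    for _, nxt in neighbors(player):
        reward = 10.0 if nxt in pellets else 0.0  # assumed pellet value
        value = reward + best_value(nxt, predict_ghost(ghost, nxt),
                                    pellets - {nxt}, depth - 1)
        best = max(best, value)
    return best

def choose_move(player: Cell, ghost: Cell, pellets, depth: int = 4) -> str:
    """Build the tree from the current state and return the best first move."""
    pellets = frozenset(pellets)
    scored = {}
    for name, nxt in neighbors(player):
        reward = 10.0 if nxt in pellets else 0.0
        scored[name] = reward + best_value(nxt, predict_ghost(ghost, nxt),
                                           pellets - {nxt}, depth - 1)
    return max(scored, key=scored.get)

# All pellet cells in the toy maze.
PELLETS = frozenset((r, c) for r, row in enumerate(MAZE)
                    for c, ch in enumerate(row) if ch == ".")

# The ghost sits two cells to the right, so stepping right would be fatal.
print(choose_move((1, 2), (1, 4), PELLETS))
```

Calling `choose_move` again after every step, with the observed rather than predicted ghost position, mimics the idea of updating the tree as the game progresses. The sketch ignores much of the real game, including multiple ghosts, fruit, power pellets and the case where the player and a ghost swap cells.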

The strategy is detailed in the study “A Model-Based Approach to Optimizing Ms. Pac-Man Game Strategies in Real Time,” to be published by the journal IEEE Transactions on Computational Intelligence and AI in Games.

“The novelty of our method is in how the decision tree is generated, combining both geometric elements of the maze with information-gathering objectives,” said Ferrari, who noted that the information in this case is the fruit Ms. Pac-Man collects for bonus points. Her team is the first to mathematically model the game’s components, whereas previous artificial players were developed with model-free methods.

Engineers take an interest in artificial players because they provide a benchmark challenge for developing new computational methods that can be applied to practical needs such as surveillance, search-and-rescue and mobile robotics.

“Engineering problems are so complicated, they’re very difficult to translate across applications. But games are very understandable and can be used to compare different algorithms unambiguously because every algorithm can be applied to the same game,” Ferrari said.

What began as such an exercise became a spectacle in 1996 when Deep Blue, a chess-playing computer developed by IBM, defeated world champion Garry Kasparov in the first game of their first match. Kasparov went on to win that match, however, and it took 11 more games, through the end of their 1997 rematch, for Deep Blue to win a match against him.

Ferrari’s Ms. Pac-Man player faces its own challenges against human players. The study found that the artificial player was unable to average better scores or produce higher scores than humans who routinely played the game.

“It’s very interesting which problems are easier for humans and which are easier for computers,” said Ferrari. “It’s not completely understood right now what elements of a problem allow humans to outperform computers and it is a question we are investigating with neuroscientists through collaborative projects supported by the Office of Naval Research and the National Science Foundation.

“In the case of Ms. Pac-Man, our mathematical model is very accurate, but the player remains imperfect because of an element of uncertainty in the decisions made by the ghosts.”

However, Ferrari’s model did produce better scores than beginners and players with intermediate skills. The artificial player also demonstrated that it was more skilled than advanced players in the upper levels of the game where speed and spatial complexity become more challenging.

While the Ms. Pac-Man Screen Capture Competition is now on indefinite hiatus, Ferrari said she may still revisit the project and improve the artificial player by adding a component that would allow it to autonomously learn from its mistakes as it plays more games.

The study is co-authored by Greg Foderaro, a staff engineer at Applied Research Associates, and Ashleigh Swingler, an engineering doctoral student at Duke University.