SAN FRANCISCO (AP) — How long does it take a robotic hand to learn to juggle a cube?

About 100 years, give or take.

That's how much virtual computing time it took researchers at OpenAI, the non-profit artificial intelligence lab funded by Elon Musk and others, to train its disembodied hand. The team paid Google $3,500 to run its software on thousands of computers simultaneously, compressing the actual time to 48 hours. After training the robot in a virtual environment, the team put it to the test in the real world.
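For a sense of scale, the figures above can be checked with a little arithmetic. The sketch below assumes "about 100 years" means 100 calendar years of simulated experience and that the $3,500 covered the whole run; the variable names are illustrative only.

```python
# Back-of-the-envelope check of the figures quoted in the article.
HOURS_PER_YEAR = 365.25 * 24

simulated_hours = 100 * HOURS_PER_YEAR  # about 100 years of simulated practice
wall_clock_hours = 48                   # actual elapsed time reported
total_cost_dollars = 3500               # amount reportedly paid to Google

# How much faster than real time the training effectively ran.
speedup = simulated_hours / wall_clock_hours

# Assumes the full payment covered all 100 simulated years.
cost_per_simulated_year = total_cost_dollars / 100

print(f"Effective speedup over real time: about {speedup:,.0f}x")
print(f"Cost per simulated year: about ${cost_per_simulated_year:.0f}")
```

Under those assumptions, the simulation ran on the order of 18,000 times faster than real time.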

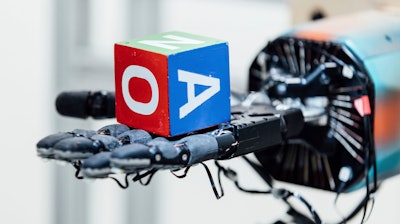

The hand, called Dactyl, learned to move itself, the team of two dozen researchers disclosed this week. Its job is simply to adjust the cube so that one of its letters ("O," "P," "E," "N," "A" or "I") faces upward to match a random selection.

Ken Goldberg, a University of California, Berkeley robotics professor who isn't affiliated with the project, said OpenAI's achievement is a big deal because it demonstrates how robots trained in a virtual environment can operate in the real world. His lab is trying something similar with a robot called Dex-Net, though its hand is simpler and the objects it manipulates are more complex.

"The key is the idea that you can make so much progress in simulation," he said. "This is a plausible path forward, when doing physical experiments is very hard."

Dactyl's real-world fingers are tracked by infrared dots and cameras. In training, every simulated movement that brought the cube closer to the goal earned Dactyl a small reward. Dropping the cube incurred a penalty 20 times as large.
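The reward scheme described here can be sketched in a few lines. This is a minimal illustration, not OpenAI's actual code: the function name, the distance measure and the reward size of 1.0 are assumptions, and only the 20-to-1 ratio between penalty and reward comes from the article.

```python
def step_reward(prev_distance, new_distance, dropped,
                progress_reward=1.0, drop_penalty_factor=20.0):
    """Hypothetical per-step reward for one simulated movement.

    prev_distance / new_distance: how far the cube is from the target
    orientation before and after the movement (units are an assumption).
    dropped: True if the cube fell out of the hand on this step.
    """
    if dropped:
        # Dropping the cube costs 20 times the small progress reward.
        return -drop_penalty_factor * progress_reward
    if new_distance < prev_distance:
        # The movement brought the cube closer to the goal.
        return progress_reward
    # No progress and no drop: no reward in this simplified sketch.
    return 0.0


print(step_reward(1.0, 0.5, dropped=False))  # progress toward the goal
print(step_reward(1.0, 0.5, dropped=True))   # cube dropped
```

With a penalty this lopsided, a policy that chases the highest total reward has a strong incentive to hold on to the cube rather than risk a fast but careless move.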

The process is called reinforcement learning. The robot software repeats the attempts millions of times in a simulated environment, trying over and over to get the highest reward. OpenAI used roughly the same algorithm it used to beat human players in a video game, "Dota 2."

In real life, a team of researchers worked about a year to get the mechanical hand to this point.

Why?

For one, the hand in a simulated environment doesn't understand friction. So even though its real fingers are rubbery, Dactyl lacks human understanding about the best grips.

Researchers injected their simulated environment with changes to gravity, hand angle and other variables so the software would learn to operate in an adaptable way. That helped narrow the gap between the real-world results and the much better results seen in simulation.
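The idea of varying the simulated world between attempts can be illustrated as follows. This is a rough sketch only: the parameter names and numeric ranges are invented for illustration, and friction is included as an assumed example of the "other variables" the article mentions.

```python
import random


def sample_environment(rng):
    """Draw one randomized set of simulation settings (all ranges hypothetical)."""
    return {
        "gravity_m_s2": rng.uniform(9.0, 10.6),    # gravity, varied around Earth's
        "hand_tilt_deg": rng.uniform(-10.0, 10.0), # angle of the hand
        "friction_coeff": rng.uniform(0.5, 1.5),   # assumed extra variable
    }


rng = random.Random(0)  # fixed seed so the sketch is repeatable

# Each training episode would run in a slightly different simulated world.
episodes = [sample_environment(rng) for _ in range(5)]
for params in episodes:
    print(params)
```

Because the software never trains in exactly the same conditions twice, it cannot rely on quirks of any one simulated setup, which is what makes the learned behavior more likely to carry over to a physical hand.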

The variations helped the hand succeed in putting the right letter face up more than a dozen times in a row before dropping the cube. In simulation, the hand typically succeeded 50 times in a row before the test was stopped.

OpenAI's goal is to develop artificial general intelligence, or machines that think and learn like humans, in a way that is safe for people and widely distributed.

Musk has warned that if AI systems are developed only by for-profit companies or powerful governments, they could one day exceed human smarts and be more dangerous than nuclear war with North Korea.